WordCount MapReduce Program

The quantity of digital data generated every day is growing exponentially with the advent of digital media and the Internet of Things, among other developments. This has created the challenge of building next-generation tools and technologies to store and manipulate this data.

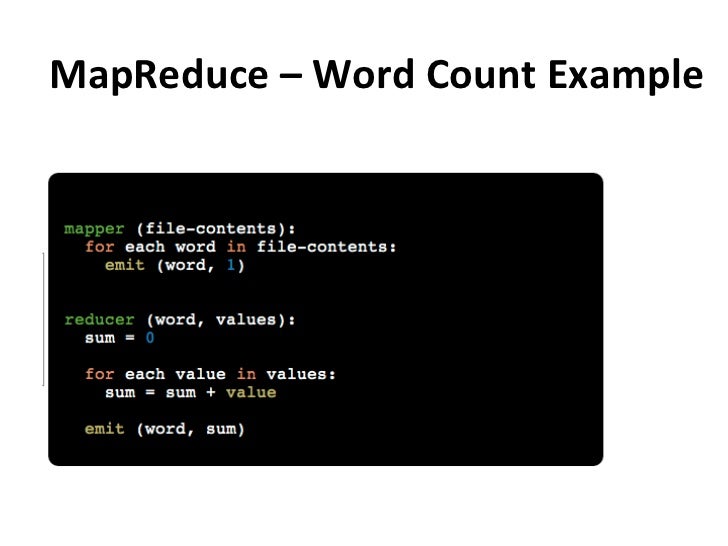

WordCount is the "Hello World" of MapReduce programs. Its mapper splits the input text into words and writes each word to the output with a frequency count of 1.
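
A minimal Python sketch of the mapper step just described. It assumes words are separated by whitespace, so punctuation and letter case are left untouched. The function name `map_line` is hypothetical. In a real Hadoop job the framework, not your code, feeds lines to the mapper and collects the pairs it emits.

```python
from typing import Iterator, Tuple

def map_line(line: str) -> Iterator[Tuple[str, int]]:
    """Mapper: emit (word, 1) for every whitespace-separated token."""
    for word in line.split():
        # Each occurrence starts with a count of 1; the totals are
        # computed later by the reducer.
        yield word, 1

if __name__ == "__main__":
    for word, count in map_line("the quick fox saw the dog"):
        print(f"{word}\t{count}")
```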

Given below is a graph depicting the growth of data generated annually worldwide since 2013. IDC estimates that the amount of data created annually will reach 180 zettabytes in 2025 (Source: IDC). IBM states that almost 2.5 quintillion bytes of data are created every day, and that 90 percent of the world's data was created in the last two years! Storing such an enormous amount of data is a challenging task. Hadoop can handle large volumes of structured and unstructured data more efficiently than a traditional enterprise data warehouse, storing these enormous data sets across distributed clusters of computers. This is where Hadoop Streaming comes in!

Hadoop Streaming uses the MapReduce framework, which can be used to write applications that process huge amounts of data. Since the MapReduce framework is based on Java, you might wonder how a developer without Java experience can work with it. With Hadoop Streaming, developers can write mapper and reducer applications in their preferred language, without much knowledge of Java and without switching to other tools or technologies such as Pig and Hive.

What is Hadoop Streaming?

Hadoop Streaming is a utility that comes with the Hadoop distribution. It can be used to execute programs for big data analysis.

Hadoop Streaming works with mappers and reducers written in languages such as Python, Java, PHP, Scala, and Perl, as well as UNIX shell scripts, among many others. The utility allows us to create and run Map/Reduce jobs with any executable or script as the mapper and/or the reducer.
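
With Hadoop Streaming, the mapper and reducer are ordinary programs that read lines from standard input and write lines to standard output. By default, the text before the first tab of each output line is treated as the key, and the framework sorts the map output by key before passing it to the reducer. The Python sketch below assumes that contract. The file name `wc_streaming.py` and its `map`/`reduce` argument are hypothetical conventions for this example and are not part of Hadoop.

```python
import sys
from itertools import groupby
from typing import Iterable, Iterator

def mapper(lines: Iterable[str]) -> Iterator[str]:
    """Streaming mapper: one 'word<TAB>1' line per whitespace token."""
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"

def reducer(lines: Iterable[str]) -> Iterator[str]:
    """Streaming reducer: assumes input is already sorted by key,
    which the framework does between the map and reduce phases."""
    # Split each non-blank line once, at the first tab, into [key, value].
    pairs = (line.rstrip("\n").split("\t", 1) for line in lines if line.strip())
    # Sorted input keeps equal keys adjacent, so groupby sees each word once.
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        total = sum(int(count) for _, count in group)
        yield f"{word}\t{total}"

# Hypothetical CLI: `wc_streaming.py map` or `wc_streaming.py reduce`.
# Nothing is read from stdin unless a role argument is given.
if __name__ == "__main__" and len(sys.argv) > 1:
    step = mapper if sys.argv[1] == "map" else reducer
    for out in step(sys.stdin):
        print(out)
```

Before submitting a job, you can try both roles locally with a shell pipeline that imitates the sort step: `cat input.txt | python3 wc_streaming.py map | sort | python3 wc_streaming.py reduce`. Here `input.txt` is a placeholder for any local text file.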

MapReduce Example

The WordCount example reads text files and counts how often words occur. The input is text files and the output is text files, each line of which contains a word and the number of times it occurred, separated by a tab. Each mapper takes a line as input and breaks it into words. It then emits a key/value pair of the word and 1.
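
The following in-memory Python simulation of a single-reducer WordCount job shows the whole data flow. A map phase emits (word, 1) pairs, a shuffle groups the values by word, and a reduce phase sums them and formats each result as a tab-separated output line. It is only an illustration: the dictionary of "files" and all function names are hypothetical stand-ins for HDFS input and the Hadoop runtime.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

def map_phase(text: str) -> Iterator[Tuple[str, int]]:
    """Each line is broken into words; each word is emitted with a count of 1."""
    for line in text.splitlines():
        for word in line.split():
            yield word, 1

def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group every emitted value under its key, as the framework does
    between the map and reduce phases."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for word, count in pairs:
        groups[word].append(count)
    return groups

def reduce_phase(word: str, counts: List[int]) -> str:
    """Sum the counts for one word and format a tab-separated output line."""
    return f"{word}\t{sum(counts)}"

def word_count(files: Dict[str, str]) -> List[str]:
    """Run map -> shuffle -> reduce over in-memory 'files'."""
    pairs = (pair for text in files.values() for pair in map_phase(text))
    groups = shuffle(pairs)
    # A reducer receives its keys in sorted order, so this
    # single-reducer output comes out sorted by word.
    return [reduce_phase(word, groups[word]) for word in sorted(groups)]

if __name__ == "__main__":
    sample = {"a.txt": "hello world\nhello", "b.txt": "world of words"}
    for line in word_count(sample):
        print(line)
```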

Each reducer sums the counts for each word and emits a single key/value pair with the word and the sum. As an optimization, the reducer is also used as a combiner on the map outputs. This reduces the amount of data sent across the network by combining the occurrences of each word in a map task's output into a single record.

To run the example, the command syntax is:

`bin/hadoop jar hadoop-*-examples.jar wordcount [-m <#maps>] [-r <#reducers>] <in-dir> <out-dir>`

All of the files in the input directory (called in-dir in the command line above) are read, and the counts of words in the input are written to the output directory (called out-dir above).
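
The combiner optimization can be illustrated with a small Python sketch; the function names and sample text are hypothetical. Summing counts gives the same result whether it is done once or in several partial steps. For that reason, the same `sum_counts` function can serve as both combiner and reducer. Running it on each mapper's output first reduces the number of records to be shuffled without changing the final counts.

```python
from collections import Counter
from typing import Iterable, List, Tuple

Pair = Tuple[str, int]

def map_split(text: str) -> List[Pair]:
    """Mapper for one input split: one (word, 1) pair per whitespace token."""
    return [(word, 1) for word in text.split()]

def sum_counts(pairs: Iterable[Pair]) -> List[Pair]:
    """Reducer logic: sum the counts per word.

    Summation can be applied to partial results and then again to the
    merged partials, so the same function also works as a combiner.
    """
    totals: Counter = Counter()
    for word, count in pairs:
        totals[word] += count
    return sorted(totals.items())

# Two hypothetical input splits, each handled by its own map task.
splits = ["to be or not to be", "to see or not to see"]

# Without a combiner, every (word, 1) pair is shuffled to the reducers.
raw = [pair for text in splits for pair in map_split(text)]

# With a combiner, each map task pre-sums its own output first.
combined = [pair for text in splits for pair in sum_counts(map_split(text))]

print(len(raw), "records without combiner")    # 12
print(len(combined), "records with combiner")  # 8
print(sum_counts(combined))
```

For the two sample splits, the mappers emit 12 records in total, but after combining only 8 records remain. The final counts are identical either way.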

Word Count MapReduce

It is assumed that both inputs and outputs are stored in HDFS. If your input is not already in HDFS but is in a local file system somewhere, you need to copy the data into HDFS using commands like these:

`bin/hadoop dfs -mkdir <hdfs-dir>`

`bin/hadoop dfs -copyFromLocal <local-dir> <hdfs-dir>`

As of version 0.17.2.1, you only need to run a command like this:

`bin/hadoop dfs -copyFromLocal <local-dir> <hdfs-dir>`

WordCount supports generic options. Below is the standard WordCount example implemented in Java.

Comments are closed.